
Basics of Orchestration

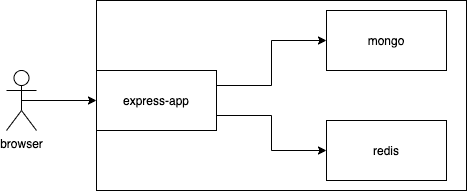

We now have a basic understanding of Docker and can use it to easily set up, e.g., a database for our app. Let us now move our focus to the frontend.

React in container

Let's create and containerize a React application next. We start with the usual steps:

$ npm create vite@latest hello-front -- --template react

$ cd hello-front

$ npm install

The next step is to turn the JavaScript code and CSS into production-ready static files. Vite already has build as an npm script, so let's use that:

$ npm run build

...

Creating an optimized production build...

...

The build folder is ready to be deployed.

...

Great! The final step is figuring out a way to use a server to serve the static files. As you may know, we could use express.static with the Express server to serve the static files. I'll leave that as an exercise for you to do at home. Instead, we are going to go ahead and start writing our Dockerfile:

FROM node:20

WORKDIR /usr/src/app

COPY . .

RUN npm ci

RUN npm run build

That looks about right. Let's build it and see if we are on the right track. Our goal is to have the build succeed without errors. Then we will use bash to check inside of the container to see if the files are there.

$ docker build . -t hello-front

=> [4/5] RUN npm ci

=> [5/5] RUN npm run

...

=> => naming to docker.io/library/hello-front

$ docker run -it hello-front bash

root@98fa9483ee85:/usr/src/app# ls

Dockerfile README.md dist index.html node_modules package-lock.json package.json public src vite.config.js

root@98fa9483ee85:/usr/src/app# ls dist

assets index.html vite.svg

A valid option for serving static files, now that we already have Node in the container, is serve. Let's try installing serve and serving the static files while we are inside the container.

root@98fa9483ee85:/usr/src/app# npm install -g serve

added 89 packages in 2s

root@98fa9483ee85:/usr/src/app# serve dist

┌────────────────────────────────────────┐
│                                        │
│   Serving!                             │
│                                        │
│   - Local:    http://localhost:3000    │
│   - Network:  http://172.17.0.2:3000   │
│                                        │
└────────────────────────────────────────┘

Great! Let's ctrl+c to exit out and then add those to our Dockerfile.

The installation of serve turns into a RUN in the Dockerfile. This way the dependency is installed during the build process. The command to serve the dist directory will become the command to start the container:

FROM node:20

WORKDIR /usr/src/app

COPY . .

RUN npm ci

RUN npm run build

RUN npm install -g serve

CMD ["serve", "dist"]

Our CMD now includes square brackets and as a result, we now use the exec form of CMD. There are actually three different forms for CMD, out of which the exec form is preferred. Read the documentation for more info.

When we now build the image with docker build . -t hello-front and run it with docker run -p 5001:3000 hello-front, the app will be available at http://localhost:5001.

Using multiple stages

While serve is a valid option, we can do better. A good goal is to create Docker images so that they do not contain anything irrelevant. With a minimal number of dependencies, images are less likely to break or become vulnerable over time.

Multi-stage builds are designed to split the build process into many separate stages, where it is possible to limit what parts of the image files are moved between the stages. That opens possibilities for limiting the size of the image since not all the by-products of the build are necessary for the resulting image. Smaller images are faster to upload and download and they help reduce the number of vulnerabilities that your software may have.

With multi-stage builds, a tried and true solution like Nginx can be used to serve static files without a lot of headaches. The Docker Hub page for Nginx gives us the information we need to open the ports, and its section "Hosting some simple static content" tells us where the static files should go.

Let's use the previous Dockerfile but change the FROM to include the name of the stage:

# The first FROM is now a stage called build-stage

FROM node:20 AS build-stage

WORKDIR /usr/src/app

COPY . .

RUN npm ci

RUN npm run build

# This is a new stage, everything before this is gone, except for the files that we want to COPY

FROM nginx:1.25-alpine

# COPY the directory dist from the build-stage to /usr/share/nginx/html

# The target location here was found from the Docker hub page

COPY --from=build-stage /usr/src/app/dist /usr/share/nginx/html

We have also declared another stage, where only the relevant files of the first stage (the dist directory, which contains the static content) are copied.

After we build it again, the image is ready to serve the static content. The default port for Nginx is 80, so the parameters of the docker run command need to be changed a bit: something like -p 8000:80 will work.
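As a rough sketch, the same image could also be built and run through a compose file. The service name app and the host port 8000 are assumptions picked for illustration; only the container port 80 comes from the Nginx default.

```yaml
# docker-compose.yml (sketch; the service name and host port are assumptions)
services:
  app:
    image: hello-front
    build:
      context: .              # directory containing the multi-stage Dockerfile
      dockerfile: Dockerfile
    ports:
      - 8000:80               # host port 8000 -> Nginx's default port 80 in the container
```

With a file like this, docker compose up --build should serve the site at http://localhost:8000, which is the same result as running docker run -p 8000:80 hello-front by hand.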

Multi-stage builds also include some internal optimizations that may affect your builds. As an example, multi-stage builds skip stages that are not used. If we wish to use a stage to replace a part of a build pipeline, like testing or notifications, we must pass some data to the following stages. In some cases this is justified: copy the code from the testing stage to the build stage. This ensures that you are building the tested code.

Development in containers

Let's move the whole todo application development to a container. There are a few reasons why you would want to do that:

- To keep the environment similar between development and production to avoid bugs that appear only in the production environment

- To avoid differences between developers and their personal environments that lead to difficulties in application development

- To help new team members hop in by having them install a container runtime, and requiring nothing else.

These are all great reasons. The tradeoff is that we may encounter some unconventional behavior when we aren't running the applications like we are used to. We will need to do at least two things to move the application to a container:

- Start the application in development mode

- Access the files with VS Code

Let's start with the frontend. Since the Dockerfile will be significantly different from the production Dockerfile let's create a new one called dev.Dockerfile.

Note we shall use the name dev.Dockerfile for development configurations and Dockerfile otherwise.

Starting Vite in development mode should be easy. Let's start with the following:

FROM node:20

WORKDIR /usr/src/app

COPY . .

# Change npm ci to npm install since we are going to be in development mode

RUN npm install

# npm run dev is the command to start the application in development mode

CMD ["npm", "run", "dev", "--", "--host"]

Note the extra parameters -- --host in the CMD. Those are needed to expose the development server outside the Docker network. By default the development server is exposed only to localhost, and even though we still access the frontend using the localhost address, it is in reality attached to the Docker network.

During the build, the flag -f tells Docker which file to use (it would otherwise default to Dockerfile), so the following command builds the image:

docker build -f ./dev.Dockerfile -t hello-front-dev .

Vite will be served on port 5173, so you can test that it works by running a container with that port published.

The second task, accessing the files with VS Code, is not yet taken care of. There are at least two ways of doing this:

- The Visual Studio Code Remote - Containers extension

- Volumes, the same thing we used to preserve data with the database

Let's go over the latter since that will work with other editors as well. Let's do a trial run with the flag -v, and if that works, then we will move the configuration to a docker-compose file. To use the -v, we will need to tell it the current directory. The command pwd should output the path to the current directory for us. Let's try this with echo $(pwd) in the command line. We can use that as the left side for -v to map the current directory to the inside of the container or we can use the full directory path.

$ docker run -p 5173:5173 -v "$(pwd):/usr/src/app/" hello-front-dev

> todo-vite@0.0.0 dev

> vite --host

VITE v5.1.6 ready in 130 ms

Now we can edit the file src/App.jsx, and the changes should be hot-loaded to the browser!

If you have a Mac with an M1/M2 processor, the above command fails. In the error message, we notice the following:

Error: Cannot find module @rollup/rollup-linux-arm64-gnu

The problem is the library rollup, which has its own version for each operating system and processor architecture. Due to the volume mapping, the container is now using the node_modules from the host machine directory, where @rollup/rollup-darwin-arm64 (the version suitable for Mac M1/M2) is installed, so the right version of the library for the container, @rollup/rollup-linux-arm64-gnu, is not found.

There are several ways to fix the problem. Let's use perhaps the simplest one. Start the container with bash as the command, and run npm install inside the container:

$ docker run -it -v "$(pwd):/usr/src/app/" hello-front-dev bash

root@b83e9040b91d:/usr/src/app# npm install

Now both versions of the library rollup are installed and the container works!

Next, let's move the config to the file docker-compose.dev.yml. This file should be at the root of the project as well:

services:
  app:
    image: hello-front-dev
    build:
      context: . # The context will pick this directory as the "build context"
      dockerfile: dev.Dockerfile # This will simply tell which dockerfile to read
    volumes:
      - ./:/usr/src/app # The path can be relative, so ./ is enough to say "the same location as the docker-compose.yml"
    ports:
      - 5173:5173
    container_name: hello-front-dev # This will name the container hello-front-dev

With this configuration, docker compose -f docker-compose.dev.yml up can run the application in development mode. You don't even need Node installed to develop it!

Note we shall use the name docker-compose.dev.yml for development environment compose files, and the default name docker-compose.yml otherwise.

Installing new dependencies is a headache for a development setup like this. One of the better options is to install the new dependency inside the container. So instead of doing e.g. npm install axios, you have to do it in the running container e.g. docker exec hello-front-dev npm install axios, or add it to the package.json and run docker build again.
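Another of the possible ways to sidestep the rollup problem described earlier is to keep node_modules out of the bind mount. The following is a minimal sketch, assuming the same service as above; the extra volumes entry is an addition for illustration and is not part of the configuration used in this material.

```yaml
services:
  app:
    image: hello-front-dev
    build:
      context: .
      dockerfile: dev.Dockerfile
    volumes:
      - ./:/usr/src/app
      # Assumption: an anonymous volume mounted over node_modules, so the
      # container keeps the modules installed during the image build
      # instead of the ones found in the host directory
      - /usr/src/app/node_modules
    ports:
      - 5173:5173
    container_name: hello-front-dev
```

The tradeoff is that packages installed on the host are no longer visible to the container, so new dependencies still have to be installed inside the container or through a rebuild. After a rebuild, the old anonymous volume may be reused; docker compose up has the flag --renew-anon-volumes for recreating it.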

Communication between containers in a Docker network

The Docker Compose tool sets up a network between the containers and includes a DNS to easily connect two containers. Let's add a new service to the Docker Compose and we shall see how the network and DNS work.

Busybox is a small executable with multiple tools that you may need. It is called "The Swiss Army Knife of Embedded Linux", and we definitely can use it to our advantage.

Busybox can help us to debug our configurations. So if you get lost in the later exercises of this section, you should use Busybox to find out what works and what doesn't. Let's use it to explore what was just said: that the containers are inside a network and can easily connect to each other. Busybox can be added to the mix by changing docker-compose.dev.yml to:

services:
  app:
    image: hello-front-dev
    build:
      context: .
      dockerfile: dev.Dockerfile
    volumes:
      - ./:/usr/src/app
    ports:
      - 5173:5173
    container_name: hello-front-dev

  debug-helper:
    image: busybox

The Busybox container won't have any process running inside so we can not exec in there. Because of that, the output of docker compose up will also look like this:

$ docker compose -f docker-compose.dev.yml up

Attaching to front-dev, debug-helper-1

debug-helper-1 exited with code 0

front-dev |

front-dev | > todo-vite@0.0.0 dev

front-dev | > vite --host

front-dev |

front-dev |

front-dev | VITE v5.2.2 ready in 153 ms

This is expected as it's just a toolbox. Let's use it to send a request to hello-front-dev and see how the DNS works. While hello-front-dev is running, we can send the request from the debug-helper with wget, since it is one of the tools included in Busybox.

With Docker Compose we can use docker compose run SERVICE COMMAND to run a service with a specific command. The wget command requires the flag -O with - to print the response to stdout:

$ docker compose -f docker-compose.dev.yml run debug-helper wget -O - http://app:5173

Connecting to app:5173 (192.168.240.3:5173)

writing to stdout

<!doctype html>

<html lang="en">

<head>

<script type="module">

...

The URL is the interesting part here. We simply said to connect to port 5173 of the service app. app is the name of the service specified in the docker-compose.dev.yml file:

services:
  app:
    image: hello-front-dev
    build:
      context: .
      dockerfile: dev.Dockerfile
    volumes:
      - ./:/usr/src/app
    ports:
      - 5173:5173
    container_name: hello-front-dev

The port used is the port from which the application is available in that container, also specified in the docker-compose.dev.yml. The port does not need to be published for other services in the same network to be able to connect to it. The "ports" in the docker-compose file are only for external access.

Let's change the port configuration in the docker-compose.dev.yml to emphasize this:

services:
  app:
    image: hello-front-dev
    build:
      context: .
      dockerfile: dev.Dockerfile
    volumes:
      - ./:/usr/src/app
    ports:
      - 3210:5173
    container_name: hello-front-dev

  debug-helper:
    image: busybox

With docker compose up, the application is available at http://localhost:3210 on the host machine, but the command

docker compose -f docker-compose.dev.yml run debug-helper wget -O - http://app:5173

still works, since the port is still 5173 within the Docker network.

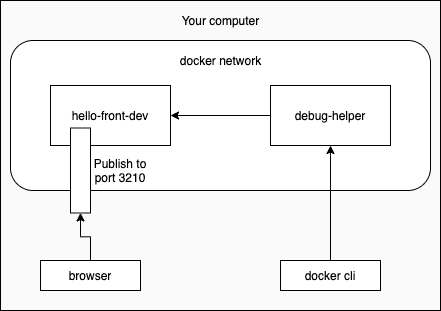

The image below illustrates what happens. The command docker compose run asks debug-helper to send the request within the network, while the browser on the host machine sends the request from outside of the network.
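To push the point about published ports a little further, here is a sketch of a variant where the app service publishes no ports at all. It is an illustration only, not a step in this walkthrough.

```yaml
services:
  app:
    image: hello-front-dev
    build:
      context: .
      dockerfile: dev.Dockerfile
    volumes:
      - ./:/usr/src/app
    # No "ports" entry: nothing is published to the host machine
    container_name: hello-front-dev

  debug-helper:
    image: busybox
```

With this variant the browser on the host should no longer reach the application, while docker compose -f docker-compose.dev.yml run debug-helper wget -O - http://app:5173 is expected to keep working as long as the app service is running, because that request never leaves the Docker network.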

Now that you know how easy it is to find other services in the docker-compose.yml and we have nothing to debug we can remove the debug-helper and revert the ports to 5173:5173 in our compose file.

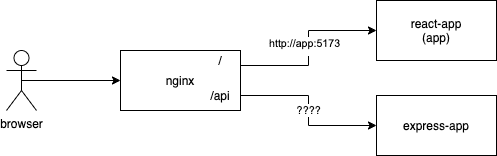

Communications between containers in a more ambitious environment

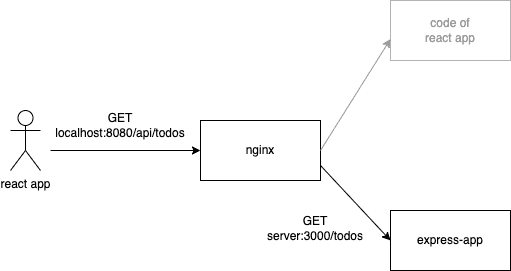

Next, we will add a reverse proxy to our docker-compose.dev.yml. According to Wikipedia:

A reverse proxy is a type of proxy server that retrieves resources on behalf of a client from one or more servers. These resources are then returned to the client, appearing as if they originated from the reverse proxy server itself.

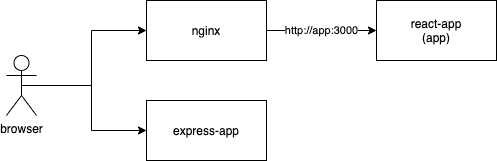

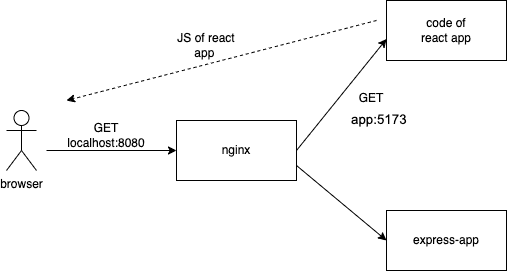

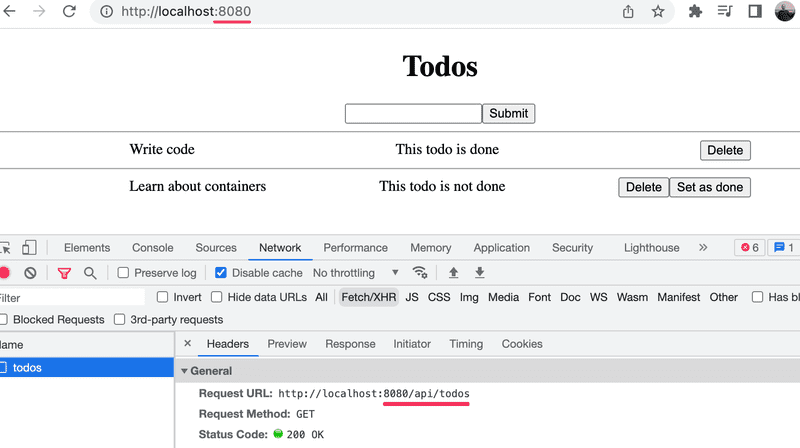

So in our case, the reverse proxy will be the single point of entry to our application, and the final goal will be to set both the React frontend and the Express backend behind the reverse proxy.

There are multiple different options for a reverse proxy implementation, such as Traefik, Caddy, Nginx, and Apache (ordered by initial release from newer to older).

Our pick is Nginx.

Let us now put the hello-front application behind the reverse proxy.

Create a file nginx.dev.conf in the project root and take the following template as a starting point. We will need to do minor edits to have our application running:

# events is required, but defaults are ok
events { }

# A http server, listening at port 80
http {
  server {
    listen 80;

    # Requests starting with root (/) are handled
    location / {
      # The following 3 lines are required for the hot loading to work (websocket).
      proxy_http_version 1.1;
      proxy_set_header Upgrade $http_upgrade;
      proxy_set_header Connection 'upgrade';

      # Requests are directed to http://localhost:5173
      proxy_pass http://localhost:5173;
    }
  }
}

Note we are using the familiar naming convention also for Nginx, nginx.dev.conf for development configurations, and the default name nginx.conf otherwise.

Next, create an Nginx service in the docker-compose.dev.yml file. Add a volume as instructed on the Docker Hub page, where the right side is :/etc/nginx/nginx.conf:ro. The final ro declares that the volume will be read-only:

services:
  app:
    # ...

  nginx:
    image: nginx:1.20.1
    volumes:
      - ./nginx.dev.conf:/etc/nginx/nginx.conf:ro
    ports:
      - 8080:80
    container_name: reverse-proxy
    depends_on:
      - app # wait for the frontend container to be started

With that added, we can run docker compose -f docker-compose.dev.yml up and see what happens.

$ docker container ls
CONTAINER ID   IMAGE             COMMAND   PORTS                    NAMES
a02ae58f3e8d   nginx:1.20.1      ...       0.0.0.0:8080->80/tcp     reverse-proxy
5ee0284566b4   hello-front-dev   ...       0.0.0.0:5173->5173/tcp   hello-front-dev

Connecting to http://localhost:8080 will lead to a familiar-looking page with 502 status.

This is because directing requests to http://localhost:5173 leads to nowhere as the Nginx container does not have an application running in port 5173. By definition, localhost refers to the current computer used to access it. Since the localhost is unique for each container, it always points to the container itself.

Let's test this by going inside the Nginx container and using curl to send a request to the application itself. In our usage curl is similar to wget, but won't need any flags.

$ docker exec -it reverse-proxy bash

root@374f9e62bfa8:/# curl http://localhost:80

<html>

<head><title>502 Bad Gateway</title></head>

...

To help us, Docker Compose has set up a network when we ran docker compose up. It has also added all of the containers mentioned in the docker-compose.dev.yml to the network. A DNS makes sure we can find the other containers in the network. The containers are each given two names, the service name and the container name, and both can be used to communicate with a container.

Since we are inside the container, we can also test the DNS! Let's curl the service name (app) on port 5173:

root@374f9e62bfa8:/# curl http://app:5173

<!doctype html>

<html lang="en">

<head>

<script type="module" src="/@vite/client"></script>

<meta charset="UTF-8" />

<link rel="icon" type="image/svg+xml" href="/vite.svg" />

<meta name="viewport" content="width=device-width, initial-scale=1.0" />

<title>Vite + React</title>

</head>

<body>

<div id="root"></div>

<script type="module" src="/src/main.jsx"></script>

</body>

</html>

That is it! Let's replace the proxy_pass address in nginx.dev.conf with that one.

One more thing: we added an option depends_on to the configuration that ensures that the nginx container is not started before the frontend container app is started:

services:
  app:
    # ...

  nginx:
    image: nginx:1.20.1
    volumes:
      - ./nginx.dev.conf:/etc/nginx/nginx.conf:ro
    ports:
      - 8080:80
    container_name: reverse-proxy
    depends_on:
      - app

If we do not enforce the starting order with depends_on, there is a risk that Nginx fails on startup, since it tries to resolve all DNS names that are referred to in the config file:

http {
  server {
    listen 80;

    location / {
      proxy_http_version 1.1;
      proxy_set_header Upgrade $http_upgrade;
      proxy_set_header Connection 'upgrade';

      proxy_pass http://app:5173;
    }
  }
}

Note that depends_on does not guarantee that the service in the depended container is ready for action. It just ensures that the container has been started (and the corresponding entry is added to DNS). If a service needs to wait for another service to become ready before starting up, other solutions should be used.
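One such solution is a health check combined with the long form of depends_on. The sketch below rests on a few assumptions that are not from this material: the check uses curl, which is expected to be available in the node:20 image, and the interval, timeout, and retry values are arbitrary.

```yaml
services:
  app:
    image: hello-front-dev
    build:
      context: .
      dockerfile: dev.Dockerfile
    volumes:
      - ./:/usr/src/app
    ports:
      - 5173:5173
    container_name: hello-front-dev
    healthcheck:
      # Assumption: curl exists in the image; the check passes once Vite answers
      test: ["CMD", "curl", "-f", "http://localhost:5173"]
      interval: 5s
      timeout: 3s
      retries: 10

  nginx:
    image: nginx:1.20.1
    volumes:
      - ./nginx.dev.conf:/etc/nginx/nginx.conf:ro
    ports:
      - 8080:80
    container_name: reverse-proxy
    depends_on:
      app:
        condition: service_healthy # start Nginx only after the health check passes
```

With condition: service_healthy, Compose should hold back the nginx container until the app container reports healthy, instead of only waiting for it to be started.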

Tools for Production

Containers are fun tools to use in development, but the best use case for them is in the production environment. There are many more powerful tools than Docker Compose to run containers in production.

Heavyweight container orchestration tools like Kubernetes allow us to manage containers on a completely new level. These tools hide away the physical machines and allow us, the developers, to worry less about the infrastructure.

If you are interested in learning more in-depth about containers come to the DevOps with Docker course and you can find more about Kubernetes in the advanced 5 credit DevOps with Kubernetes course. You should now have the skills to complete both of them!